1. CPU Utilization:

a. # sar -u

b. # mpstat

2. Memory Utilization:

a. top

b. prstat

c. vmstat

d. swap -l

3. Disk Utilization:

a. df -h

b. iostat -En

4. Network Status:

a. netstat -i

b. netstat -a

c. netstat -m

5. Solaris Volume Manager/SVM:

a. metastat -i

b. metastat -p

c. metadb -i

d. cat /etc/lvm/md.tab

e. metastat | grep -i replace

6. Logs:

a. /var/adm/sulog

b. /var/adm/loginlog

c. last

d. /var/adm/messages

7. User session:

a. w

b. who

c. users

8. FTP usage:

a. ftpwho

b. ftpcount
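The disk checks above are usually read by eye. As a minimal sketch (written in Python, which is an assumption, since these notes contain no scripting), the function below scans text captured from df -h and reports file systems at or above a capacity threshold. It assumes the Solaris df -h column layout (capacity in the next-to-last column, mount point in the last); the function name, the 90% default, and the sample output are hypothetical.

```python
def filesystems_over(df_output, limit_pct=90):
    """Return (mount_point, capacity_pct) for file systems at or above limit_pct.

    Assumes the Solaris `df -h` layout: ... capacity  mount-point
    """
    full = []
    for line in df_output.strip().splitlines()[1:]:   # skip the header line
        fields = line.split()
        if len(fields) < 6:
            continue                                   # wrapped or malformed line
        capacity, mount = fields[-2], fields[-1]
        if not capacity.endswith("%"):
            continue
        pct = int(capacity.rstrip("%"))
        if pct >= limit_pct:
            full.append((mount, pct))
    return full


if __name__ == "__main__":
    # Hypothetical output, e.g. captured with: df -h > /tmp/df.txt
    sample = """Filesystem             size   used  avail capacity  Mounted on
/dev/dsk/c1t0d0s0      9.6G   8.9G   610M    94%    /
/dev/dsk/c1t0d0s7       20G   2.1G    18G    11%    /export/home
swap                   3.2G   1.1M   3.2G     1%    /tmp
"""
    print(filesystems_over(sample))   # [('/', 94)]
```

Mount points containing spaces would confuse this simple whitespace split; for the standard Solaris layout it is sufficient.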

Wednesday, September 8, 2010

Wednesday, July 21, 2010

/etc/hosts.equiv and $HOME/.rhosts:

When a remote user requests access, the first file read by the local host is its /etc/passwd file. An entry for that particular user in this file enables that user to log in to the local host from a remote system. If a password is associated with that account, the remote user must supply this password at login to gain system access.

If there is no entry in the local host’s /etc/passwd file for the remote user, access is denied.

The /etc/hosts.equiv and $HOME/.rhosts files bypass this standard password-based authentication and determine whether a remote user is allowed to access the local host with the identity of a local user.

These files provide a remote authentication procedure to make that determination.

This procedure first checks the /etc/hosts.equiv file and then checks the $HOME/.rhosts file in the home directory of the local user whose account is being accessed. The information in these two files (if they exist) determines whether remote access is granted or denied.

Difference between /etc/hosts.equiv and $HOME/.rhosts

/etc/hosts.equiv

- The information in this file applies to the entire system.

$HOME/.rhosts

- The information in this file applies to the individual user.

- In other words, individual users can maintain their own $HOME/.rhosts files in their directories.

Entries in the /etc/hosts.equiv and $HOME/.rhosts files:

Both files use the same format and can hold the same entries, but an entry has a different effect in each file.

Both files are formatted as a list of one-line entries, which can contain the following types of entries:

hostname

hostname username

+

Note: The host names in the above files must be the official name of the host, not one of its alias names.

/etc/hosts.equiv file rules:

- For regular users, the /etc/hosts.equiv file identifies remote hosts and remote users who are considered to be trusted.

- The file is not checked at all if the remote user requesting local access is the root user.

- If the file contains the host name of a remote host, then all regular users of that remote host are trusted and do not need to supply a password to log in to the local host, provided that each remote user is known to the local host by an entry in the local /etc/passwd file; otherwise, access is denied.

- This file does not exist by default. It must be created if trusted remote user access is required on the local host.

$HOME/.rhosts file rules:

- Applies to a specific user.

- All users, including the root user, can create and maintain their own .rhosts files in their home directories.

- This file does not exist by default. It can be created in the user’s home directory.
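The lookup order described above can be modelled in a few lines. This is a simplified Python sketch, not the actual in.rlogind/in.rshd logic: the function names and return values are hypothetical, and it treats a two-field "hostname username" entry as trusting only that remote user, which glosses over the differences between the two files documented in the hosts.equiv(4) manual page.

```python
def entry_trusts(entry, remote_host, remote_user, local_user):
    """True if one hosts.equiv/.rhosts line trusts remote_user@remote_host."""
    fields = entry.split()
    if not fields or fields[0].startswith("#"):
        return False
    host = fields[0]
    if host != "+" and host != remote_host:      # "+" matches every host
        return False
    if len(fields) == 1:
        # Host-only entry: the remote login name must match the local one.
        return remote_user == local_user
    # Simplified assumption: "hostname username" trusts only that remote user.
    return fields[1] == remote_user


def remote_access(remote_host, remote_user, local_user,
                  passwd_users, hosts_equiv, rhosts):
    """Return "denied", "trusted" (no password needed) or "password".

    hosts_equiv: lines of /etc/hosts.equiv
    rhosts:      lines of the local user's $HOME/.rhosts
    """
    if local_user not in passwd_users:
        return "denied"                   # no /etc/passwd entry: access denied
    if local_user != "root":              # hosts.equiv is never consulted for root
        if any(entry_trusts(e, remote_host, remote_user, local_user)
               for e in hosts_equiv):
            return "trusted"
    if any(entry_trusts(e, remote_host, remote_user, local_user) for e in rhosts):
        return "trusted"
    return "password"                     # fall back to normal password login
```

Because root skips /etc/hosts.equiv, the only way to give root password-free access in this model is root's own .rhosts, which matches the rule that every user, including root, can maintain a personal .rhosts file.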


Plex

PLEX:

1. Volume Manager uses sub-disks to build virtual objects called plexes.

2. A plex is a structured or ordered collection of sub-disks from one or more VM disks.

3. A plex cannot be shared by two volumes.

4. A volume can have a maximum of 32 plexes.

5. Two data plexes in the same volume mirror each other by default.

6. A plex has a minimum of one sub-disk and a maximum of 4096 sub-disks.

7. There are 3 types of plexes:

a. Complete plex: holds a complete copy of a volume.

b. Log plex: dedicated to logging.

c. Sparse plex: not a complete copy of the volume. Sparse plexes are not used in newer versions of Volume Manager.

8. Data on sub-disks can be organized to form a plex by using the following layouts:

a. Concatenation

b. Striping

c. Mirroring

d. Striping with parity
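The structural rules in points 1 to 6 can be expressed as a small data model. This is a hypothetical Python sketch, not VxVM code: the class and method names are illustrative, the limits are the ones quoted above, and is_mirrored() ignores log plexes for simplicity.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import List, Optional

MAX_SUBDISKS_PER_PLEX = 4096   # limits quoted in the notes above
MAX_PLEXES_PER_VOLUME = 32


@dataclass
class Plex:
    name: str
    subdisks: List[str]
    layout: str = "CONCAT"
    # Owning volume; excluded from repr/eq to avoid Plex <-> Volume recursion.
    volume: Optional[Volume] = field(default=None, repr=False, compare=False)

    def __post_init__(self):
        if not 1 <= len(self.subdisks) <= MAX_SUBDISKS_PER_PLEX:
            raise ValueError(
                f"{self.name}: a plex needs 1 to {MAX_SUBDISKS_PER_PLEX} sub-disks")


@dataclass
class Volume:
    name: str
    plexes: List[Plex] = field(default_factory=list)

    def attach(self, plex: Plex) -> None:
        if plex.volume is not None:          # a plex cannot be shared by two volumes
            raise ValueError(f"{plex.name} already belongs to {plex.volume.name}")
        if len(self.plexes) >= MAX_PLEXES_PER_VOLUME:
            raise ValueError(f"{self.name} already has {MAX_PLEXES_PER_VOLUME} plexes")
        plex.volume = self
        self.plexes.append(plex)

    def is_mirrored(self) -> bool:
        # Two or more data plexes in one volume mirror each other (log plexes ignored).
        return len(self.plexes) >= 2
```

For example, Plex("oradgvol1-01", ["oradg02-04", "oradg02-05"]) mirrors the vxmake command used later in these notes; a second plex such as a hypothetical "oradgvol1-02" attached to the same volume would make it mirrored.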

Plex states:

1. If a disk that holds a particular plex begins to fail, we can temporarily disable the plex.

2. VxVM utilities automatically maintain the plex state.

VxVM utilities use the plex states to:

a. Indicate whether volume contents have been initialized to a known state

b. Determine if a plex contains a valid copy (mirror) of the volume contents

c. Track whether a plex was in active use at the time of a system failure

d. Monitor operations on the plex

3. States of a plex:

There are 15 plex states:

a. ACTIVE plex state:

- On a running system, ACTIVE should be the most common state observed for any volume plex.

- A plex can be in the ACTIVE state in two ways:

i. The volume is started and the plex fully participates in normal volume I/O, so the plex contents change as the contents of the volume change.

ii. The volume was stopped as a result of a system crash, and the plex was ACTIVE at the moment of the crash.

- In the latter case, a system failure can leave plex contents in an inconsistent state. When the volume is started, VxVM performs a recovery action to guarantee that the contents of the plexes marked as ACTIVE are made identical.

b. CLEAN plex state:

- The plex is known to contain a consistent copy (mirror) of the volume contents, and an operation has disabled the volume.

c. DCOSNP plex state:

- Used by a DCO (Data Change Object) plex. Indicates that the DCO plex attached to a volume can be used by a snapshot plex to create a DCO volume during a snapshot operation.

d. EMPTY plex state:

- Indicates that the plex has not been initialized.

e. IOFAIL plex state:

- Occurs when the vxconfigd daemon detects an uncorrectable I/O error.

- It is likely that one or more of the disks associated with the plex need to be replaced.

f. LOG plex state:

- The state of a DRL (Dirty Region Log) plex is always set to LOG.

g. OFFLINE plex state:

- Although the detached plex maintains its association with the volume, changes to the volume do not update the OFFLINE plex. The plex is not updated until it is brought online and reattached.

h. SNAPATT plex state:

- Indicates a snapshot plex that is being attached by the snapstart operation.

- Note: If the system fails before the attach completes, the plex and all of its sub-disks are removed.

i. SNAPDIS plex state:

- Indicates a snapshot plex that is fully attached.

j. SNAPDONE plex state:

- Indicates that the snapshot plex is ready for a snapshot to be taken.

k. SNAPTMP plex state:

- Used while a snapshot is being prepared on a volume.

l. STALE plex state:

- If there is a possibility that a plex does not have the complete and current volume contents, that plex is placed in the STALE state.

- If an I/O error occurs on a plex, the kernel stops using and updating the contents of that plex, and the plex state is set to STALE.

- Note 1: # vxplex att

The vxplex att operation recovers the contents of a STALE plex from an ACTIVE plex.

- Note 2: # vxplex det

The root user can run vxplex det to put a plex in the STALE state.

m. TEMP plex state:

- Indicates that an operation on the plex is incomplete.

n. TEMPRM plex state:

- Similar to the TEMP state, except that at the completion of the operation, the TEMPRM plex is removed.

o. TEMPRMSD plex state:

- Used when attaching new data plexes to a volume.

- If the synchronization operation does not complete, the plex and its sub-disks are removed.
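When reading the STATE column of vxprint output, the fifteen states above can be kept as a lookup table. A minimal Python sketch: the descriptions paraphrase these notes (they are not VxVM message text), the table and function names are hypothetical, and only STALE and IOFAIL map to an action because those are the two states the notes attach a remedy to.

```python
# Plex states from the list above, paraphrased from these notes.
PLEX_STATES = {
    "ACTIVE":   "in use; contents track the volume",
    "CLEAN":    "consistent copy; volume disabled by an operation",
    "DCOSNP":   "DCO plex usable to create a DCO volume for a snapshot",
    "EMPTY":    "not initialized",
    "IOFAIL":   "uncorrectable I/O error detected by vxconfigd",
    "LOG":      "dirty region log plex",
    "OFFLINE":  "detached; not updated until brought online and reattached",
    "SNAPATT":  "snapshot plex being attached by snapstart",
    "SNAPDIS":  "snapshot plex fully attached",
    "SNAPDONE": "snapshot plex ready for a snapshot",
    "SNAPTMP":  "snapshot being prepared",
    "STALE":    "may not hold complete, current volume contents",
    "TEMP":     "operation incomplete",
    "TEMPRM":   "operation incomplete; plex removed on completion",
    "TEMPRMSD": "new data plex being attached; removed if sync fails",
}

# States for which the notes name a follow-up action.
NEEDS_ACTION = {
    "STALE":  "recover the contents from an ACTIVE plex with vxplex att",
    "IOFAIL": "one or more disks in the plex likely need replacing",
}


def describe(state):
    key = state.strip().upper()
    if key not in PLEX_STATES:
        raise KeyError(f"unknown plex state: {state!r}")
    return PLEX_STATES[key]


def suggested_action(state):
    """Return a follow-up hint, or None when the notes imply no action."""
    return NEEDS_ACTION.get(state.strip().upper())
```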

Plex condition flags:

1. IOFAIL plex condition

2. NODAREC plex condition

3. NODEVICE plex condition

4. RECOVER plex condition

5. REMOVED plex condition

Plex kernel states:

No user intervention is required to set these states; they are maintained internally. On a system that is operating properly, all plexes are enabled.

DETACHED plex kernel state:

- Maintenance is being performed on the plex.

- Write requests to the volume are not reflected in the plex.

- Read requests from the volume are not satisfied from the plex.

DISABLED plex kernel state:

- The plex is offline and cannot be accessed.

ENABLED plex kernel state:

- The plex is online.

- Write requests to the volume are reflected in the plex.

- Read requests from the volume are satisfied from the plex.
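The read/write rules for the three kernel states can be illustrated with a toy model. This Python sketch is hypothetical and only shows which plexes take part in I/O; VxVM's configurable read policy is not modelled, so reads simply go to the first ENABLED plex.

```python
def plexes_for_io(kernel_states, op):
    """Return the plexes that take part in a volume read or write.

    kernel_states: dict mapping plex name -> "ENABLED" | "DETACHED" | "DISABLED".
    """
    enabled = [name for name, k in kernel_states.items() if k == "ENABLED"]
    if op == "write":
        return enabled          # every ENABLED plex receives the write
    if op == "read":
        return enabled[:1]      # toy policy: the first ENABLED plex serves the read
    raise ValueError(f"op must be 'read' or 'write', not {op!r}")


if __name__ == "__main__":
    states = {"vol1-01": "ENABLED", "vol1-02": "DETACHED", "vol1-03": "ENABLED"}
    print(plexes_for_io(states, "write"))   # ['vol1-01', 'vol1-03']
    print(plexes_for_io(states, "read"))    # ['vol1-01']
```

In this example vol1-02 misses every write while DETACHED, which is why a plex returning from maintenance cannot be trusted until its contents are recovered from an ACTIVE plex (vxplex att).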

ADMINISTERING PLEXES:

1. Creating the plex

2. Viewing the plex information

3. Associating the plex with the volume

4. Dissociating the plex from the volume

5. Deleting the plex

Creating a plex:

Output:

bash-3.00# vxmake -g oradg plex oradgvol1-01 sd=oradg02-04,oradg02-05

bash-3.00# vxprint -g oradg -pt

PL NAME VOLUME KSTATE STATE LENGTH LAYOUT NCOL/WID MODE

pl oradgvol1-01 - DISABLED - 8388608 CONCAT - RW

pl oradgvol01-01 - DISABLED - 2097152 CONCAT - RW

pl vol1-01 vol1 ENABLED ACTIVE 4194304 CONCAT - RW

Viewing plex information:

bash-3.00# vxprint -g oradg -pt

PL NAME VOLUME KSTATE STATE LENGTH LAYOUT NCOL/WID MODE

pl oradgvol1-01 - DISABLED - 8388608 CONCAT - RW

pl oradgvol01-01 oradgvolume DISABLED EMPTY 2097152 CONCAT - RW

pl vol1-01 vol1 ENABLED ACTIVE 4194304 CONCAT - RW

bash-3.00# vxplex -g oradg att oradgvolume oradgvol1-01

bash-3.00# vxprint -g oradg -pt

PL NAME VOLUME KSTATE STATE LENGTH LAYOUT NCOL/WID MODE

pl oradgvol1-01 oradgvolume DISABLED EMPTY 8388608 CONCAT - RW

pl oradgvol01-01 oradgvolume DISABLED EMPTY 2097152 CONCAT - RW

pl vol1-01 vol1 ENABLED ACTIVE 4194304 CONCAT - RW

bash-3.00# vxprint -g oradg -l oradgvol01-01

Plex: oradgvol01-01

info: len=2097152

type: layout=CONCAT

state: state= kernel=DISABLED io=read-write

assoc: vol=(dissoc) sd=oradg02-02

flags:

Associating plex to volume:

Output:

Case-1:

bash-3.00# vxplex -g oradg att oradgvolume oradgvol1-01

bash-3.00# vxprint -g oradg -pt

PL NAME VOLUME KSTATE STATE LENGTH LAYOUT NCOL/WID MODE

pl oradgvol1-01 oradgvolume DISABLED EMPTY 8388608 CONCAT - RW

pl oradgvol01-01 oradgvolume DISABLED EMPTY 2097152 CONCAT - RW

pl vol1-01 vol1 ENABLED ACTIVE 4194304 CONCAT - RW

Case-2:

bash-3.00# vxmake -g oradg -U fsgen vol oradgvolume plex=oradgvol01-01

bash-3.00# vxprint -g oradg -pt

PL NAME VOLUME KSTATE STATE LENGTH LAYOUT NCOL/WID MODE

pl oradgvol1-01 - DISABLED - 8388608 CONCAT - RW

pl oradgvol01-01 oradgvolume DISABLED EMPTY 2097152 CONCAT - RW

pl vol1-01 vol1 ENABLED ACTIVE 4194304 CONCAT - RW

bash-3.00# vxprint -g oradg -pt

PL NAME VOLUME KSTATE STATE LENGTH LAYOUT NCOL/WID MODE

pl oradgvol1-01 oradgvolume DISABLED EMPTY 8388608 CONCAT - RW

pl oradgvol01-01 oradgvolume DISABLED EMPTY 2097152 CONCAT - RW

pl vol1-01 vol1 ENABLED ACTIVE 4194304 CONCAT - RW

Dissociating/Deleting the plex:

Output:

Case-1:

bash-3.00# vxplex -g oradg det oradgvol1-01

bash-3.00# vxprint -g oradg -pt

PL NAME VOLUME KSTATE STATE LENGTH LAYOUT NCOL/WID MODE

pl oradgvol1-01 oradgvolume DETACHED EMPTY 8388608 CONCAT - RW

pl oradgvol01-01 oradgvolume DISABLED EMPTY 2097152 CONCAT - RW

pl vol1-01 vol1 ENABLED ACTIVE 4194304 CONCAT - RW

Case-2:

bash-3.00# vxplex -g oradg -o rm dis oradgvol1-01

bash-3.00# vxprint -g oradg -pt

PL NAME VOLUME KSTATE STATE LENGTH LAYOUT NCOL/WID MODE

pl oradgvol01-01 oradgvolume DISABLED EMPTY 2097152 CONCAT - RW

pl vol1-01 vol1 ENABLED ACTIVE 4194304 CONCAT - RW
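The vxprint -pt listings above can be checked mechanically. A minimal Python sketch, assuming the nine-column "pl" row layout shown in these transcripts (PL NAME VOLUME KSTATE STATE LENGTH LAYOUT NCOL/WID MODE); the function names are hypothetical, and it simply flags plexes that are dissociated or not ENABLED/ACTIVE.

```python
def parse_plex_rows(vxprint_pt_output):
    """Parse the 'pl' rows of `vxprint -g <dg> -pt` into dictionaries."""
    keys = ("name", "volume", "kstate", "state", "length",
            "layout", "ncol_wid", "mode")
    rows = []
    for line in vxprint_pt_output.splitlines():
        fields = line.split()
        if len(fields) < 9 or fields[0] != "pl":
            continue                  # header ("PL NAME ...") and non-plex lines
        rows.append(dict(zip(keys, fields[1:9])))
    return rows


def flag_plexes(rows):
    """Return (plex, reason) for plexes that are not ENABLED/ACTIVE in a volume."""
    flagged = []
    for r in rows:
        if r["volume"] == "-":
            flagged.append((r["name"], "not associated with a volume"))
        elif (r["kstate"], r["state"]) != ("ENABLED", "ACTIVE"):
            flagged.append((r["name"], f'{r["kstate"]}/{r["state"]}'))
    return flagged
```

Fed the "Viewing plex information" listing, it flags oradgvol1-01 as dissociated and oradgvol01-01 as DISABLED/EMPTY, and leaves vol1-01 alone.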


Wednesday, May 26, 2010

Branded Zones:

Branded zones are available beginning with the Solaris 10 8/07 release.

The branded zones (BrandZ) framework provides the means to create non-global branded zones that contain non-native operating environments for running applications.

Every zone is configured with an associated brand. The default is the native brand, Solaris. A branded zone supports exactly one brand of non-native binary, which means that a branded zone provides a single operating environment.

Note :

1. We cannot run Solaris applications inside an lx zone. However, the lx zone enables us to use the Solaris system to develop, test, and deploy Linux applications. For example, we can place a Linux application in an lx zone and analyze it using Solaris tools run from the global zone.

2. We can change the brand of a zone while it is in the configured state. Once a branded zone has been installed, its brand cannot be changed or removed.

3. The system must be x86 or x64 based.

4. The lx (Linux) brand supports only the whole root model, so each installed zone will have its own copy of every file.

5. There are no limits on how much disk space can be consumed by a zone. The global administrator is responsible for space restriction.

6. Currently, the lx brand installer supports only Red Hat Enterprise Linux 3.x and the equivalent CentOS distributions.

http://hub.opensolaris.org/bin/view/Community+Group+brandz/downloads

We can download the CentOS tarball from the link above.

7. The naming rules for a branded zone are the same as for any other non-global zone.

Output:

1. To display the architecture of the system on which the branded zone is implemented:

bash-3.00# arch

i86pc

2. To display the release of the Solaris Operating System:

bash-3.00# cat /etc/release

Solaris 10 10/09 s10x_u8wos_08a X86

Copyright 2009 Sun Microsystems, Inc. All Rights Reserved.

Use is subject to license terms.

Assembled 16 September 2009

3. Configuring BrandZ zone:

bash-3.00# mkdir -m 700 /export/home/linux_brandz

bash-3.00# zonecfg -z linux_brandz

linux_brandz: No such zone configured

Use 'create' to begin configuring a new zone.

zonecfg:linux_brandz> create -t SUNWlx

zonecfg:linux_brandz> set zonepath=/export/home/linux_brandz

zonecfg:linux_brandz> add net

zonecfg:linux_brandz:net> set physical=e1000g0

zonecfg:linux_brandz:net> set address=100.0.0.123

zonecfg:linux_brandz:net> end

zonecfg:linux_brandz> commit

zonecfg:linux_brandz> exit

bash-3.00# zoneadm -z linux_brandz install -d /Desktop/centos_fs_image.tar.bz2

where -d specifies the path of the non-native OS tarball/image.

Installing zone 'linux_brandz' at root directory '/export/home/linux_brandz'

from archive '/Desktop/centos_fs_image.tar.bz2'

This process may take several minutes.

Setting up the initial lx brand environment.

System configuration modifications complete.

Setting up the initial lx brand environment.

System configuration modifications complete.

Installation of zone 'linux_brandz' completed successfully.

Details saved to log file:

"/export/home/linux_brandz/root/var/log/linux_brandz.install.1982.log"

bash-3.00# zoneadm list -iv

ID NAME STATUS PATH BRAND IP

0 global running / native shared

- linux_brandz installed /export/home/linux_brandz lx shared

bash-3.00# zoneadm -z linux_brandz boot

bash-3.00# zoneadm list -iv

ID NAME STATUS PATH BRAND IP

0 global running / native shared

1 linux_brandz running /export/home/linux_brandz lx shared

bash-3.00# zlogin linux_brandz

[Connected to zone 'linux_brandz' pts/4]

Welcome to your shiny new Linux zone.

- The root password is 'root'. Please change it immediately.

- To enable networking goodness, see /etc/sysconfig/network.example.

- This message is in /etc/motd. Feel free to change it.

For anything more complicated, see:

http://opensolaris.org/os/community/brandz/

You have mail.

-bash-2.05b# uname -a

Linux linux_brandz 2.4.21 BrandZ fake linux i686 i686 i386 GNU/Linux

-bash-2.05b# hostname

linux_brandz

Wow! It works. Fabulous.
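The interactive zonecfg session above can also be captured as a command file and replayed with zonecfg -z linux_brandz -f <file>. This Python sketch generates that file and applies a rough zone-name check; the helper names are hypothetical, and the naming rule (starts with an alphanumeric, then alphanumerics, "_", "-" or ".", at most 64 characters, with "global" and names beginning with "SUNW" reserved) is our reading of zonecfg(1M), so confirm it there.

```python
import re

ZONE_NAME = re.compile(r"[A-Za-z0-9][A-Za-z0-9_.-]{0,63}")


def valid_zone_name(name):
    """Rough check of non-global zone naming rules (assumed; see zonecfg(1M))."""
    if name == "global" or name.startswith("SUNW"):
        return False                       # reserved names
    return ZONE_NAME.fullmatch(name) is not None


def lx_zone_commands(zonepath, physical, address):
    """Build a zonecfg command file equivalent to the interactive session above."""
    lines = [
        "create -t SUNWlx",
        f"set zonepath={zonepath}",
        "add net",
        f"set physical={physical}",
        f"set address={address}",
        "end",
        "commit",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    name = "linux_brandz"
    if valid_zone_name(name):
        print(lx_zone_commands("/export/home/linux_brandz", "e1000g0", "100.0.0.123"),
              end="")
```

Saving the printed text to a file keeps the zone definition reproducible, which helps when the same lx zone has to be rebuilt on another host.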

Thursday, May 6, 2010

Solaris : Advanced Installation

JumpStart Installation:

There are 2 versions of JumpStart:

1. JumpStart: Automatically installs the Solaris software on a SPARC-based system just by inserting the Solaris CD and powering on the system. There is no need to specify the boot command at the ok prompt. The software that is installed is specified by a default class file that is chosen based on the system’s model and the size of its disks; we cannot choose the software that is to be installed. For new SPARC systems shipped from Sun, this is the default method of installing the operating system when the system is first powered on.

2. Custom JumpStart: This method of installing the operating system provides a way to install groups of similar systems automatically and identically. At a large site with several systems that are to be configured exactly the same, installing each system by hand can be monotonous and time-consuming, and there is no guarantee that every system is set up the same way. Custom JumpStart solves this problem by letting us create sets of configuration files in advance, so that the installation process can use them to configure each system automatically.
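The configuration files Custom JumpStart relies on are a rules file (which system gets which profile) and one or more profiles (what to install). This Python sketch only renders their text; the helper names, the sun4u rule, and the software group chosen are hypothetical examples, and the keywords shown (install_type, system_type, partitioning, cluster) are a minimal subset, so check the Solaris installation guide for the full list.

```python
def rules_entry(keyword, value, profile, begin="-", finish="-"):
    """One line of a JumpStart rules file:
    rule_keyword  rule_value  begin_script  profile  finish_script
    ("-" means no begin or finish script)."""
    return f"{keyword} {value} {begin} {profile} {finish}"


def profile(cluster, install_type="initial_install",
            system_type="standalone", partitioning="default"):
    """Render a minimal JumpStart profile (class file)."""
    return "\n".join([
        f"install_type  {install_type}",
        f"system_type   {system_type}",
        f"partitioning  {partitioning}",
        f"cluster       {cluster}",
    ]) + "\n"


if __name__ == "__main__":
    # Hypothetical: every sun4u system gets the developer software group.
    print(rules_entry("karch", "sun4u", "basic_prof"))
    print(profile("SUNWCprog"), end="")
```

Before clients can use a rules file, it has to be validated with the check script from the Solaris media, which produces the rules.ok file that the installation actually reads.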

Flash Installation :

A complete snapshot of a Solaris operating system, including patches and applications.

Limitation: Flash can be used only to perform an initial installation; it cannot be used to upgrade a system.

Flash archive:

Provides a method to store a snapshot of the Solaris operating system, complete with all installed patches and applications. This archive can be stored on disk, optical media, or tape media. It can be used for disaster recovery or to replicate an environment on one or more other systems.

Solaris Live Upgrade:

Provides a method of upgrading a system whilst the system continues to operate.

While the current boot environment is running, it’s possible to duplicate the boot environment, and then upgrade the duplicate. Alternatively, rather than upgrading, it’s possible to install a Solaris Flash archive.

The original/current system configuration remains fully functional and unaffected by the upgrade or installation of an archive.

When ready, we can activate the new boot environment by rebooting the system. If a failure occurs, we can quickly revert to the original boot environment with a simple reboot.

Solaris Live Upgrade process phases:

1. Creating an alternate boot environment (ABE) by cloning a current Solaris OS instance. The source for this cloning could also be a flash archive.

2. Changing the state of the system in the ABE for reasons including the following:

a. Upgrading to another OS release

b. Updating a release with patches or updates

3. Activating the new boot environment (BE).

4. Optionally falling back to the original BE.
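The four phases map onto the Live Upgrade commands lucreate, luupgrade, and luactivate. This Python sketch only builds and prints the command lines as a dry run; the boot environment names, root slice, and OS image path are hypothetical, it assumes a single UFS root slice for the ABE, and the exact options should be checked against lucreate(1M), luupgrade(1M), and luactivate(1M) before running anything as root.

```python
import shlex


def live_upgrade_plan(new_be, root_slice, os_image, current_be=None):
    """Return the Live Upgrade commands for phases 1-3 as argument lists."""
    create = ["lucreate"]
    if current_be:
        create += ["-c", current_be]            # name the running BE (first use only)
    create += ["-n", new_be, "-m", f"/:{root_slice}:ufs"]
    return [
        create,                                             # 1. clone into the ABE
        ["luupgrade", "-u", "-n", new_be, "-s", os_image],  # 2. upgrade the ABE
        ["luactivate", new_be],                             # 3. activate the new BE
        ["init", "6"],                                      # reboot with init, not reboot
    ]


def fallback_plan(original_be):
    """Phase 4: reactivate the original BE and reboot."""
    return [["luactivate", original_be], ["init", "6"]]


if __name__ == "__main__":
    # Dry run: print the commands instead of executing them.
    plan = live_upgrade_plan("s10u8", "/dev/dsk/c1t1d0s0", "/cdrom/cdrom0",
                             current_be="s10u7")
    for cmd in plan:
        print(shlex.join(cmd))
```

The reboot step uses init 6 because Live Upgrade only switches boot environments during an orderly shutdown; the reboot and halt commands bypass that step.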

Solaris Live Upgrade is supported on the following versions:

Solaris 8 OS

Solaris 9 OS

Solaris 10 OS

Note - 1:

The only limitation to upgrading involves a Solaris Flash archive. When a Solaris Flash archive is used to install, an archive that contains non-global zones is not properly installed on the system.

Note - 2:

Correct operation of Solaris Live Upgrade requires that a limited set of patch revisions be installed for a particular OS version. These patches must be installed before installing or running Solaris Live Upgrade.

Note - 3:

To estimate the file system size needed to create a boot environment, start creating a new boot environment. Once the size has been calculated, abort the process.

Note - 4:

Solaris Live Upgrade packages are included starting with the Solaris 10 5/09 release.

If the release is older, the packages can be installed by using the pkgadd command.

Packages required:

SUNWlucfg

SUNWlur

SUNWluu

Make a note that these packages MUST be installed in this order.

There are 2 versions of JumpStart:

1. JumpStart : Automatically install the Solaris software on SPARC based system just be inserting the Solaris CD and powering on the system. Need not to specify the boot command at OK prompt. The software that is installed in specified a default class file that is chosen based on the system’s model and the size of its disks; can’t choose the software that is to be installed. For new SPARC systems shipped from Sun, this is the default method of installing the operating system when the system is first powered on.

2. Custom JumpStart : Method of installing the operating system provides a way to install groups of similar systems automatically and identically. At a large site with several systems that are to be configured exactly the same, this task can be monotonous and time consuming. In addition, there is no guarantee that each system is setup is same. Custom JumpStart solves this problem by providing a method to create sets of configuration files earlier, so that the installation process can use them to configure each system automatically.

Flash Installation :

A complete snapshot of a Solaris operating system, including with patches and applications.

Limitation: Used to perform an initial installation, Flash cannot be used to upgrade a system.

Flash archive:

Provides a method to store a snapshot of the Solaris operating system complete with all installed patches and applications. This archive can be stored on disk, Optical media or tape

media. This archive can be used for disaster recovery purposes or to replicate an environment on one or more other systems.

Solaris Live Upgrade:

Provides a method of upgrading a system whilst the system continues to operate.

While the current boot environment is running, it’s possible to duplicate the boot environment, and then upgrade the duplicate. Alternatively, rather than upgrading, it’s possible to install a Solaris Flash archive.

The original/current system configuration remains fully functional and unaffected by the upgrade or installation of an archive.

When ready, can activate the new boot environment by rebooting the system. If a failure occurs, can quickly revert to the original boot environment with a simple reboot.

Solaris Live Upgrade Process – Phase:

1. Creating an alternate boot environment (ABE) by cloning a current Solaris OS instance. The source for this cloning could also be a flash archive.

2. Changing the state of the system in the ABE for reasons including the following:

a. Upgrading to another OS release

b. Updating a release with patches or updates

3. Activating the new boot environment (BE).

4. Optionally falling back to the original BE.

Solaris Live Upgrade are supported by the following version:

Solaris 8 OS

Solaris 9 OS

Solaris 10 OS

Note - 1:

The only limitation to upgrading involves a Solaris Flash archive. When Solaris Flash archive is used to install, an archive that contains non-global zones are not properly installed on the system.

Note - 2:

Correct operation of Solaris Live Upgrade requires that a limited set of patch revisions be installed for a particular OS version. Before installing or running Solaris Live Upgrade, it’s required to install these patches.

Note - 3:

To estimate the file system size that is needed to create a boot environment, start the creation of a new boot environment. Once the size has been calculated, abort the process.

Note - 4:

Solaris Live Upgrade packages are available starting with the Solaris 10 05/09 release.

If the running release is older, the packages can be installed by using the pkgadd command.

Packages required:

SUNWlucfg

SUNWlur

SUNWluu

Make a note that these packages MUST be installed in this order.

Wednesday, April 21, 2010

Solaris: Veritas Volume Manager: Root Mirroring

Recommendations:

1. The disk that holds the Solaris Operating System and the disk to which the operating system is to be mirrored should have the same geometry.

2. Copy the /etc/system and /etc/vfstab files as a precaution before initiating the changes.

Condition:

1. The disk that has the operating system and the disk that is going to hold the mirror should be brought under volume manager control only with the sliced layout.

2. Here the disks have been brought under volume manager control as mentioned above, in the disk group named “rootdg”.

3. The disk that has the operating system must be brought under volume manager control through encapsulation. This is done to preserve the data contained on the disk.

Output: Before bringing the disk under the volume manager control:

bash-3.00# vxdisk list

DEVICE TYPE DISK GROUP STATUS

c1t0d0s2 auto:none - - online invalid

c1t1d0s2 auto:none - - online invalid

c2t3d0s2 auto:simple - - online invalid

c2t5d0s2 auto:cdsdisk oradg1 oradg online

c2t9d0s2 auto:cdsdisk oradg2 oradg online

c2t10d0s2 auto:cdsdisk oradg3 oradg online

c2t12d0s2 auto:cdsdisk oradg4 oradg online

Steps followed:

# cp /etc/system /etc/system.orig.bkp

# cp /etc/vfstab /etc/vfstab.orig.bkp

# vxdiskadm

Choose the option: 2

Option 2 brings the disk under volume manager control through encapsulation. The utility guides us through the process; bring the disk in with the sliced layout, in the disk group “rootdg”.

Initially the disk group “rootdg” does not exist. Create it when initializing the disk.

Once the OS disk has been brought under volume manager control, the system must be restarted twice.

Once the system has been restarted, we can observe the changes to the files /etc/system and /etc/vfstab.

Output : /etc/vfstab (After restarting the system twice)

bash-3.00# cat /etc/vfstab

#device device mount FS fsck mount mount

#to mount to fsck point type pass at boot options

#

fd - /dev/fd fd - no -

/proc - /proc proc - no -

/dev/vx/dsk/bootdg/swapvol - - swap - no nologging

/dev/vx/dsk/bootdg/rootvol /dev/vx/rdsk/bootdg/rootvol / ufs 1 no nologging

/devices - /devices devfs - no -

sharefs - /etc/dfs/sharetab sharefs - no -

ctfs - /system/contract ctfs - no -

objfs - /system/object objfs - no -

swap - /tmp tmpfs - yes -

#/dev/dsk/c1t1d0s3 /dev/rdsk/c1t1d0s3 /mnt/veritas ufs - yes -

#NOTE: volume rootvol (/) encapsulated partition c1t0d0s0

#NOTE: volume swapvol (swap) encapsulated partition c1t0d0s1

Note:

1. rootvol and swapvol should exist in the disk group that is chosen as the boot disk group. (Volumes named rootvol and swapvol can also be created in other disk groups.) Only the rootvol and swapvol in bootdg can be used to boot the system.

2. bootdg is an alias for the disk group that contains the volumes that are used to boot the system.

3. Volume manager sets bootdg to the appropriate disk group if it takes control of the root disk. Otherwise, bootdg is set to nodg (no disk group).

We can observe the changes by executing

# vxprint -vt

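We have to bring the second disk under volume manager control in the same way, with the sliced layout, either by using the

# vxdiskadm

utility, or by executing

# vxdisksetup -i c1t1d0 format=sliced

and then adding the initialized disk to the disk group:

# vxdg -g rootdg adddisk rootdg02=c1t1d0

Note:

Here the system has to be restarted twice.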

Output: After bringing both disks under the disk group (rootdg) with the sliced layout:

bash-3.00# vxdisk list

DEVICE TYPE DISK GROUP STATUS

c1t0d0s2 auto:sliced rootdg01 rootdg online

c1t1d0s2 auto:sliced rootdg02 rootdg online

c2t3d0s2 auto:simple - - online invalid

c2t5d0s2 auto:cdsdisk oradg1 oradg online

c2t9d0s2 auto:cdsdisk oradg2 oradg online

c2t10d0s2 auto:cdsdisk oradg3 oradg online

c2t12d0s2 auto:cdsdisk oradg4 oradg online
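Instead of reading the table by eye, the vxdisk list output can be checked with awk before mirroring. This is a minimal sketch that assumes the default column order shown above (DEVICE TYPE DISK GROUP STATUS); the function name check_root_disks is hypothetical.

```shell
#!/bin/sh
# Read `vxdisk list` output on stdin. For each device named as an argument,
# report whether it is a sliced disk in rootdg with status "online".
# Returns non-zero if any named device is missing or not ready.
check_root_disks() {
    awk -v want="$*" '
        BEGIN {
            bad = 0
            n = split(want, dev, " ")
            for (i = 1; i <= n; i++) need[dev[i]] = 1
        }
        ($1 in need) {
            seen[$1] = 1
            if ($2 == "auto:sliced" && $4 == "rootdg" && $5 == "online")
                print $1 ": OK"
            else {
                print $1 ": NOT READY (" $0 ")"
                bad = 1
            }
        }
        END {
            for (d in need)
                if (!(d in seen)) { print d ": NOT FOUND"; bad = 1 }
            exit bad
        }'
}
```

For example: vxdisk list | check_root_disks c1t0d0s2 c1t1d0s2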

# vxassist -g rootdg mirror rootvol

mirrors the root volume.

The synchronization status can be observed in another terminal by executing

# vxtask list

The synchronization takes some time.

Alternatively,

# vxmirror -g rootdg -a

This command mirrors all existing volumes in the specified disk group.

Note:

# vxmirror

1. Provides a mechanism to mirror all non-mirrored volumes that are located on a specified disk, or to mirror all currently non-mirrored volumes in the specified disk group.

2. Can change or display the current defaults for mirroring.

3. All volumes that have only a single plex (mirror copy) are mirrored by adding an additional plex.

4. Volumes containing subdisks that reside on MORE THAN ONE DISK ARE NOT MIRRORED by the vxmirror command.

Similarly, to mirror the swap volume:

# vxassist -g rootdg mirror swapvol

Outputs: After mirroring the root

bash-3.00# df -h

Filesystem size used avail capacity Mounted on

/dev/vx/dsk/bootdg/rootvol

5.8G 5.3G 465M 93% /

/devices 0K 0K 0K 0% /devices

ctfs 0K 0K 0K 0% /system/contract

proc 0K 0K 0K 0% /proc

mnttab 0K 0K 0K 0% /etc/mnttab

swap 2.0G 1.6M 2.0G 1% /etc/svc/volatile

objfs 0K 0K 0K 0% /system/object

sharefs 0K 0K 0K 0% /etc/dfs/sharetab

/platform/sun4u-us3/lib/libc_psr/libc_psr_hwcap1.so.1

5.8G 5.3G 465M 93% /platform/sun4u-us3/lib/libc_psr.so.1

/platform/sun4u-us3/lib/sparcv9/libc_psr/libc_psr_hwcap1.so.1

5.8G 5.3G 465M 93% /platform/sun4u-us3/lib/sparcv9/libc_psr.so.1

fd 0K 0K 0K 0% /dev/fd

swap 2.0G 120K 2.0G 1% /tmp

swap 2.0G 40K 2.0G 1% /var/run

swap 2.0G 0K 2.0G 0% /dev/vx/dmp

swap 2.0G 0K 2.0G 0% /dev/vx/rdmp

bash-3.00# vxprint -s

Disk group: rootdg

TY NAME ASSOC KSTATE LENGTH PLOFFS STATE TUTIL0 PUTIL0

sd rootdg01-B0 swapvol-01 ENABLED 1 0 - - Block0

sd rootdg01-01 swapvol-01 ENABLED 4097330 1 - - -

sd rootdg01-02 rootvol-01 ENABLED 12288402 0 - - -

sd rootdg02-01 rootvol-02 ENABLED 12288402 0 - - -

sd rootdg02-02 swapvol-02 ENABLED 4097331 0 - - -

bash-3.00# vxprint -p

Disk group: rootdg

TY NAME ASSOC KSTATE LENGTH PLOFFS STATE TUTIL0 PUTIL0

pl rootvol-01 rootvol ENABLED 12288402 - ACTIVE - -

pl rootvol-02 rootvol ENABLED 12288402 - ACTIVE - -

pl swapvol-01 swapvol ENABLED 4097331 - ACTIVE - -

pl swapvol-02 swapvol ENABLED 4097331 - ACTIVE - -

bash-3.00# vxprint -v

Disk group: rootdg

TY NAME ASSOC KSTATE LENGTH PLOFFS STATE TUTIL0 PUTIL0

v rootvol root ENABLED 12288402 - ACTIVE - -

v swapvol swap ENABLED 4097331 - ACTIVE - -
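The plex listing above (vxprint -p) is what confirms the mirror: every volume should have two plexes that are ENABLED and ACTIVE. A minimal sketch of that check, assuming the column layout shown above (TY NAME ASSOC KSTATE LENGTH PLOFFS STATE ...); the function name check_mirrors is hypothetical.

```shell
#!/bin/sh
# Read `vxprint -p` output on stdin and print each volume with its number of
# ENABLED/ACTIVE plexes. Returns non-zero if any volume has fewer than two.
check_mirrors() {
    awk '
        BEGIN { bad = 0 }
        $1 == "pl" {
            vols[$3] = 1                      # ASSOC column = owning volume
            if ($4 == "ENABLED" && $7 == "ACTIVE") good[$3]++
        }
        END {
            for (v in vols) {
                printf "%s %d\n", v, good[v]
                if (good[v] < 2) bad = 1
            }
            exit bad
        }'
}
```

For example: vxprint -g rootdg -p | check_mirrors. Run it again after vxtask list shows no remaining tasks, since a plex that is still synchronizing may not yet be ACTIVE.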

Note:

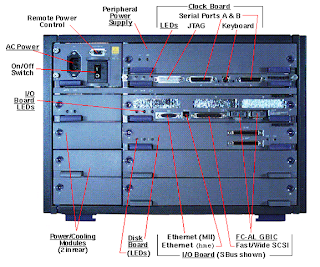

The image published on this page shows a Sun E4500.

Saturday, April 17, 2010

Solaris: Virtualization

Virtualization:

Virtualization means creating a virtual, or abstract, version of a physical device or resource, such as a server, storage device, network, or even an operating system, where the framework divides the resource into one or more execution environments.

Zones:

1. A zone is a virtual environment that is created within a single running instance of the Solaris Operating System.

2. Applications can run in an isolated and secure environment.

3. Even a privileged user in a zone cannot monitor or access processes running in a different zone.

4. Zones are a subset of containers.

5. Zones run on SPARC and x86 machines.
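As a minimal sketch of the zone life cycle, the function below prints the commands that configure, install and boot a zone. The zone name webzone and the zone path /zones/webzone are hypothetical; zonecfg accepts several subcommands separated by semicolons in a single argument. Commands are only printed unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Print each command by default; set DRY_RUN=0 to actually execute it.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

ZONE=webzone              # hypothetical zone name
ZONEPATH=/zones/webzone   # hypothetical zone root directory

create_zone() {
    # Define the zone configuration, check it, and save it.
    run zonecfg -z "$ZONE" "create; set zonepath=$ZONEPATH; set autoboot=true; verify; commit"
    # Copy the required files into the zone path, then boot the zone.
    run zoneadm -z "$ZONE" install
    run zoneadm -z "$ZONE" boot
    # List all configured zones with their current state.
    run zoneadm list -cv
}
```

After the zone boots, zlogin -C webzone attaches to its console to complete the initial system identification.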

Containers:

1. Containers = Zones + Solaris Resource Management (SRM)

2. A container is a technology that combines resource management features, such as resource pools, with Solaris zones.

3. Remember that all Solaris containers on a system share the same kernel.

Resource Management:

1. Resource management is one of the integral components of Solaris 10 container technology.

2. It allows us to do the following:

i. Allocate specific computer resources, such as CPU time and memory.

ii. Monitor how resource allocations are being used, and adjust the allocations when required.

iii. Generate more detailed accounting information.

iv. Use the resource capping daemon (rcapd) to regulate how much physical memory is used by a project. [Remember, a project can be a number of processes/users.]

v. Run multiple workloads on a single server, providing an isolated environment for each, so that one workload cannot affect the performance of another.

vi. The resource management feature of Solaris containers is extremely useful when a number of applications need to be consolidated to run on a single server.
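To illustrate point iv, here is a minimal sketch that creates a project with a physical memory cap and enables rcapd. The project name dbproj, the user oracle and the 2 GB cap (given in bytes) are hypothetical values; the commands are only printed unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Print each command by default; set DRY_RUN=0 to actually execute it.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

PROJECT=dbproj            # hypothetical project name
PROJ_USER=oracle          # hypothetical user allowed to join the project
CAP_BYTES=2147483648      # hypothetical cap: 2 GB of resident memory

cap_project_memory() {
    # Create the project with an rcap.max-rss attribute for rcapd to enforce.
    run projadd -c "database workload" -U "$PROJ_USER" -K "rcap.max-rss=$CAP_BYTES" "$PROJECT"
    # Enable the resource capping daemon.
    run rcapadm -E
    # Report memory usage of capped projects: 3 samples, 5 seconds apart.
    run rcapstat 5 3
}
```

Processes run in the project (for example with newtask -p dbproj) are then kept under the cap by rcapd, which pages out memory when the project's resident set exceeds it.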

Consolidation:

1. Reduces the cost and complexity of having to manage numerous separate systems.

2. Consolidates applications onto fewer, larger, more scalable servers, while segregating the workloads to restrict the resources that each can use.

LDOM:

1. Logical Domains (LDoms) technology allows us to allocate a system’s various resources, such as memory, CPUs and devices, into logical groupings and to create multiple discrete systems, each with its own operating system, resources and identity, within a single computer system.

2. This is achieved by introducing a firmware layer (the hypervisor).

3. Runs on SPARC-based servers that support hypervisor technology.
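A minimal sketch of carving out a guest domain with the Logical Domains Manager, assuming the control domain and its virtual services are already configured. The domain name ldg1 and the 8 vCPU / 4 GB sizes are hypothetical. A usable guest also needs a virtual disk and network (ldm add-vdisk, ldm add-vnet), which are omitted here. Commands are only printed unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Print each command by default; set DRY_RUN=0 to actually execute it.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

LDOM=ldg1   # hypothetical guest domain name

create_ldom() {
    run ldm add-domain "$LDOM"        # create an empty domain
    run ldm add-vcpu 8 "$LDOM"        # assign 8 virtual CPUs
    run ldm add-memory 4G "$LDOM"     # assign 4 GB of memory
    run ldm bind-domain "$LDOM"       # bind the resources to the domain
    run ldm start-domain "$LDOM"      # start the domain
    run ldm list                      # show all domains and their state
}
```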

Additional:

Hypervisor:

1. The hypervisor is the layer between the operating system and the hardware.

2. Also called a VMM (Virtual Machine Monitor), it allows multiple operating systems to run concurrently on a host computer.

3. It provides the guest operating systems with a virtual platform and monitors the execution of the guest operating systems.

4. The virtual machine approach using a hypervisor isolates failures in one operating system from the other operating systems sharing the hardware.

Special thanks to Mr. T. Gurubalan, Sun Microsystems, my mentor, for providing the information needed to blog this post.

Tuesday, April 6, 2010

Solaris: ZFS Administration: Disk Administration – Trouble Shooting


When ZFS takes control of a whole disk, it can change the disk layout: ZFS writes an EFI label to the disk.

For instance, the disk c2t9d0 was earlier under the control of ZFS.

After removing the disk from ZFS control and trying to create a new slice (default – ufs), we find that the disk still carries the EFI partition table written by ZFS:

OUTPUT TRUNCATED…

AVAILABLE DISK SELECTIONS:

0. c1t0d0

/pci@8,600000/SUNW,qlc@4/fp@0,0/ssd@w21000020371b6542,0

1. c1t1d0

/pci@8,600000/SUNW,qlc@4/fp@0,0/ssd@w21000020373e0ced,0

2. c2t3d0

/pci@8,700000/scsi@6,1/sd@3,0

3. c2t5d0

/pci@8,700000/scsi@6,1/sd@5,0

4. c2t9d0

/pci@8,700000/scsi@6,1/sd@9,0

5. c2t12d0

/pci@8,700000/scsi@6,1/sd@c,0

6. c2t15d0

/pci@8,700000/scsi@6,1/sd@f,0

Specify disk (enter its number): 4

format> p

partition> p

Current partition table (original):

Total disk sectors available: 17672849 + 16384 (reserved sectors)

Part Tag Flag First Sector Size Last Sector

0 usr wm 256 8.43GB 17672849

1 unassigned wm 0 0 0

2 unassigned wm 0 0 0

3 unassigned wm 0 0 0

4 unassigned wm 0 0 0

5 unassigned wm 0 0 0

6 unassigned wm 0 0 0

8 reserved wm 17672850 8.00MB 17689233

partition>q

format>q

In that case, we can run format with the -e option:

-e = Enables the SCSI expert menu; among other things, it lets us choose the label type (SMI or EFI) when labeling the disk.

For example: # format -e c2t9d0

OUTPUT:

bash-3.00# format -e c2t9d0

selecting c2t9d0

[disk formatted]

FORMAT MENU:

disk - select a disk

type - select (define) a disk type

partition - select (define) a partition table

current - describe the current disk

format - format and analyze the disk

repair - repair a defective sector

label - write label to the disk

analyze - surface analysis

defect - defect list management

backup - search for backup labels

verify - read and display labels

inquiry - show vendor, product and revision

scsi - independent SCSI mode selects

cache - enable, disable or query SCSI disk cache

volname - set 8-character volume name

!

quit

format>p

partition> modify

Select partitioning base:

0. Current partition table (original)

1. All Free Hog

Choose base (enter number) [0]? 1

Part Tag Flag First Sector Size Last Sector

0 usr wm 0 0 0

1 unassigned wm 0 0 0

2 unassigned wm 0 0 0

3 unassigned wm 0 0 0

4 unassigned wm 0 0 0

5 unassigned wm 0 0 0

6 unassigned wm 0 0 0

7 unassigned wm 0 0 0

8 reserved wm 0 0 0

Do you wish to continue creating a new partition

table based on above table[yes]?

Free Hog partition[6]?

Enter size of partition 0 [0b, 33e, 0mb, 0gb, 0tb]:

Enter size of partition 1 [0b, 33e, 0mb, 0gb, 0tb]:

Enter size of partition 2 [0b, 33e, 0mb, 0gb, 0tb]:

Enter size of partition 3 [0b, 33e, 0mb, 0gb, 0tb]:

Enter size of partition 4 [0b, 33e, 0mb, 0gb, 0tb]:

Enter size of partition 5 [0b, 33e, 0mb, 0gb, 0tb]:

Enter size of partition 7 [0b, 33e, 0mb, 0gb, 0tb]:

Part Tag Flag First Sector Size Last Sector

0 unassigned wm 0 0 0

1 unassigned wm 0 0 0

2 unassigned wm 0 0 0

3 unassigned wm 0 0 0

4 unassigned wm 0 0 0

5 unassigned wm 0 0 0

6 usr wm 34 8.43GB 17672848

7 unassigned wm 0 0 0

8 reserved wm 17672849 8.00MB 17689232

Ready to label disk, continue? y

partition> l

[0] SMI Label

[1] EFI Label

Specify Label type[1]: 0

Warning: This disk has an EFI label. Changing to SMI label will erase all

current partitions.

Continue? y

Auto configuration via format.dat[no]?

Auto configuration via generic SCSI-2[no]?

partition> p

Current partition table (default):

Total disk cylinders available: 4924 + 2 (reserved cylinders)

Part Tag Flag Cylinders Size Blocks

0 root wm 0 - 73 129.75MB (74/0/0) 265734

1 swap wu 74 - 147 129.75MB (74/0/0) 265734

2 backup wu 0 - 4923 8.43GB (4924/0/0) 17682084

3 unassigned wm 0 0 (0/0/0) 0

4 unassigned wm 0 0 (0/0/0) 0

5 unassigned wm 0 0 (0/0/0) 0

6 usr wm 148 - 4923 8.18GB (4776/0/0) 17150616

7 unassigned wm 0 0 (0/0/0) 0

partition>q

format>q

Note:

EFI (Extensible Firmware Interface) is the default label that ZFS uses when it is given a whole disk.
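The two partition tables above differ in a way that is easy to test for: an EFI table is reported in sectors ("Total disk sectors available"), while an SMI (VTOC) table is reported in cylinders ("Total disk cylinders available"). A minimal sketch that classifies a captured partition> print listing (for example, a format session saved with script(1)); the function name label_type is hypothetical.

```shell
#!/bin/sh
# Read format's "partition> print" output on stdin and print EFI or SMI.
# Prints UNKNOWN and returns 1 if neither header line is present.
label_type() {
    awk '
        /Total disk sectors available/   { print "EFI"; found = 1; exit }
        /Total disk cylinders available/ { print "SMI"; found = 1; exit }
        END { if (!found) { print "UNKNOWN"; exit 1 } }'
}
```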

Wednesday, March 24, 2010

Linux: Assigning a password to GRUB

How to assign a password to GRUB after Linux OS is installed?

1. Log in to the system as the 'root' user.

2. Execute the command

bash-3.0# grub

Now we can observe the change in the prompt.

Execute:

grub> md5crypt

This command prompts for a password. Type the password, and it generates the encrypted (MD5-hashed) password.

Output:

GNU GRUB version 0.97 (640K lower / 3072K upper memory)

[ Minimal BASH-like line editing is supported. For the first word, TAB

lists possible command completions. Anywhere else TAB lists the possible

completions of a device/filename.]

grub> md5crypt

Password: ******

Encrypted: $1$DDD2V/$MEJHXqlrloKA6gO1PCc3x1

3. Make a note of (or copy) the encrypted password, then quit from grub.

grub> quit

4. Now edit the file /etc/grub.conf and add a password --md5 line containing the encrypted password to the global section, before the first title entry, as follows:

Output:

bash-3.0# vi /etc/grub.conf

# grub.conf generated by anaconda

#

# Note that you do not have to rerun grub after making changes to this file

# NOTICE: You have a /boot partition. This means that

# all kernel and initrd paths are relative to /boot/, eg.

# root (hd0,0)

# kernel /vmlinuz-version ro root=/dev/sda2

# initrd /initrd-version.img

#boot=/dev/sda

default=0

timeout=5

splashimage=(hd0,0)/grub/splash.xpm.gz

password --md5 $1$DDD2V/$MEJHXqlrloKA6gO1PCc3x1

hiddenmenu

title Red Hat Enterprise Linux Server (2.6.18-8.el5)

root (hd0,0)

kernel /vmlinuz-2.6.18-8.el5 ro root=LABEL=/ rhgb quiet

initrd /initrd-2.6.18-8.el5.img

:wq!

5. Save and quit.

That's it.
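Step 4 can also be scripted. A minimal sketch, assuming a GRUB legacy configuration in which the global section ends at the first title line; the function name add_grub_password is hypothetical. It writes the new configuration to standard output and replaces any existing password line in the global section.

```shell
#!/bin/sh
# Print CONF with "password --md5 HASH" placed just before the first
# "title" line; an existing password line in the global section is dropped.
# (awk -v interprets backslashes, which MD5-crypt hashes never contain.)
add_grub_password() {
    conf=$1
    hash=$2
    awk -v hash="$hash" '
        !done && /^[ \t]*password[ \t]/ { next }
        !done && /^[ \t]*title[ \t]/    { print "password --md5 " hash; done = 1 }
        { print }
        END { if (!done) print "password --md5 " hash }
    ' "$conf"
}
```

For example, add_grub_password /etc/grub.conf '$1$DDD2V/$MEJHXqlrloKA6gO1PCc3x1' > /tmp/grub.conf.new, then review the result before copying it over the original. Single quotes keep the shell from expanding the $ signs in the hash.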

Tuesday, March 16, 2010

Solaris: Autofs

By default, an automounted shared resource remains mounted for 10 minutes (600 seconds) when idle; after that, it is unmounted.

If the time has to be changed, it can be done by executing the following command:

bash-3.00# automount -t 15 -v

Where

-t = specifies the time, in seconds, that a file system remains mounted when not in use

-v = verbose mode

If the same has to be applied to all automounted shared resources, we can edit the following file:

bash-3.00# more /etc/default/autofs

#ident "@(#)autofs 1.2 04/11/12 SMI"

#

# Copyright 2004 Sun Microsystems, Inc. All rights reserved.

# Use is subject to license terms.

#

# The duration in which a file system will remain idle before being

# unmounted. This is equivalent to the "-t" argument to automount.

AUTOMOUNT_TIMEOUT=700

(Output Truncated)
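Editing the file can also be scripted. A minimal sketch, assuming the KEY=value format shown above; the function name set_automount_timeout is hypothetical. It keeps a copy of the original as FILE.bak, replaces an existing (possibly commented-out) AUTOMOUNT_TIMEOUT line, and appends one if none exists.

```shell
#!/bin/sh
# Set AUTOMOUNT_TIMEOUT=SECONDS in FILE (normally /etc/default/autofs).
set_automount_timeout() {
    file=$1
    secs=$2
    case $secs in
        ''|*[!0-9]*) echo "timeout must be a number of seconds" >&2; return 1 ;;
    esac
    cp "$file" "$file.bak" || return 1
    if grep -q '^#*AUTOMOUNT_TIMEOUT=' "$file.bak"; then
        # Replace the existing setting, uncommenting it if necessary.
        sed "s/^#*AUTOMOUNT_TIMEOUT=.*/AUTOMOUNT_TIMEOUT=$secs/" "$file.bak" > "$file"
    else
        { cat "$file.bak"; echo "AUTOMOUNT_TIMEOUT=$secs"; } > "$file"
    fi
}
```

For example, set_automount_timeout /etc/default/autofs 700. The autofs service typically has to be restarted (svcadm restart autofs) before the new value takes effect.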

Solaris: How to customize the log settings for a file?

Ex: Customizing the log setting to the file /var/adm/messages.

As we know, because the server keeps running, more and more log messages are written to the file /var/adm/messages.

When disk space is limited, we can rotate the log, keep only the rotated logs from a recent period (the age can be specified in weeks), and limit the size of the rotated logs.

bash-3.00# ls -lh /var/adm | grep messages

-rw-r--r-- 1 root root 743K Mar 16 12:16 messages

The -h option shows the file size in human-readable format.

bash-3.00# logadm -S 10k /var/adm/messages

bash-3.00# ls -lh /var/adm| grep messages

-rw-r--r-- 1 root root 0 Mar 16 12:17 messages

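After limiting the total size of the rotated (old) logs to 10 KB. The command also rotated /var/adm/messages, which is why it now shows a size of 0.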

bash-3.00# logadm -A 10w /var/adm/messages

bash-3.00# ls -lh /var/adm/messages

-rw-r--r-- 1 root root 0 Mar 16 12:17 /var/adm/messages

After restricting the rotated logs to those modified within the last 10 weeks.


Saturday, March 13, 2010

Solaris: Daemons

Process Description:

init

The Unix program which spawns all other processes.

biod

Works in cooperation with the remote nfsd to handle client NFS requests.

dhcpd

Dynamically configures TCP/IP information for clients.

fingerd

Provides a network interface for the finger protocol, as used by the finger command.

ftpd

Services FTP requests from a remote system. It listens at the port specified in the services file for ftp.

httpd

Web server daemon.

inetd

Listens for network connection requests. If a request is accepted, it can launch a background daemon to handle the request. Some systems use the replacement command xinetd.

lpd

The line printer daemon that manages printer spooling.

nfsd

Processes NFS operation requests from client systems. Historically each nfsd daemon handled one request at a time, so it was normal to start multiple copies.

ntpd

Network Time Protocol daemon that manages clock synchronization across the network. xntpd implements the version 3 standard of NTP.

rpcbind

Converts RPC program numbers into network addresses, so that clients can locate RPC services such as ypbind.

sshd

Listens for secure shell requests from clients.

sendmail

SMTP daemon.

swapper

Copies process regions to swap space in order to reclaim physical pages of memory for the kernel. Also called sched.

syslogd

System logger process that collects various system messages.

syncd

Periodically keeps the file systems synchronized with system memory.

xfsd

Serves X11 fonts to remote clients.

vhand

Releases pages of memory for use by other processes. Also known as the "page stealing daemon".

ypbind

Finds the server for an NIS domain and stores the information in a file.
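To see which of these daemons are actually running on a system, the process list can be compared against a list of names. A minimal sketch; the function name check_daemons is hypothetical. It reads one command name or path per line (as printed by ps -e -o comm) and strips any leading directory before comparing.

```shell
#!/bin/sh
# Read a process list on stdin (one command name or path per line) and
# report, in argument order, whether each named daemon appears in it.
check_daemons() {
    awk -v want="$*" '
        BEGIN { n = split(want, d, " ") }
        {
            name = $1
            sub(/.*\//, "", name)          # /usr/lib/ssh/sshd -> sshd
            running[name] = 1
        }
        END {
            for (i = 1; i <= n; i++)
                print d[i] ": " ((d[i] in running) ? "running" : "not running")
        }'
}
```

For example: ps -e -o comm | check_daemons syslogd inetd sshd nfsd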

Tuesday, March 9, 2010

Solaris: Security Administration

TO DENY USERS THE USE OF THE /usr/bin/write COMMAND:

1. Consider two existing users named malcomx and scbose.

2. Log in as the above-mentioned users through telnet.

Note:

The /usr/bin/write command delivers a message only while the recipient is logged in.

login: malcomx

Password:

Sun Microsystems Inc. SunOS 5.10 Generic January 2005

-bash-3.00$ id -a malcomx

uid=100(malcomx) gid=1(other) groups=1(other)

-bash-3.00$ write scbose

hi, good day

login: scbose

Password:

Sun Microsystems Inc. SunOS 5.10 Generic January 2005

-bash-3.00$

Message from malcomx on sunvm1 (pts/5) [ Tue Mar 9 12:22:11 ] ...

hi, good day

Now we observe that the message from the user malcomx has been delivered to the user scbose.

Now perform the following activity as the “root” user:

bash-3.00# ls -l /usr/bin | grep write

-r-xr-sr-x 1 root tty 14208 Jan 23 2005 write

bash-3.00# chmod o-x /usr/bin/write

This removes execute permission for others.

bash-3.00# ls -l /usr/bin|grep write

-r-xr-sr-- 1 root tty 14208 Jan 23 2005 write

Now checking as the user malcomx:

-bash-3.00$ write scbose

-bash: /usr/bin/write: Permission denied

The user is denied because the permissions have been changed.

Now assign SUID and SGID to the /usr/bin/write command:

bash-3.00# chmod 4554 /usr/bin/write

bash-3.00# chmod g+s /usr/bin/write

bash-3.00# ls -l /usr/bin|grep write

-r-sr-sr-- 1 root tty 14208 Jan 23 2005 write

Create a group (here “test”), assign a password to the group, and change the group ownership of /usr/bin/write to it, as follows:

bash-3.00# chgrp test /usr/bin/write

bash-3.00# ls -l /usr/bin|grep write

-r-sr-sr-- 1 root test 14208 Jan 23 2005 write

Now, as the user malcomx:

-bash-3.00$ write scbose

-bash: /usr/bin/write: Permission denied

Now switch to the group “test” by supplying its password:

-bash-3.00$ newgrp test

newgrp: Password:

bash-3.00$ write scbose

scbose is logged on more than one place.

You are connected to "pts/7".

Other locations are:

pts/8

it's works... fabulous...!

Now, as scbose, we observe that the message has been sent from the user malcomx.

-bash-3.00$ id

uid=101(scbose) gid=1(other)

-bash-3.00$

Message from malcomx on sunvm1 (pts/5) [ Tue Mar 9 12:30:40 ] ...

it's works... fabulous...!
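The root-side steps above can be collected into one function. A minimal sketch; the name restrict_to_group is hypothetical. It changes the group first and sets the mode bits afterwards, because some systems clear the SUID/SGID bits when a file's owner or group is changed. Like the manual steps, it leaves the command SUID root, so it is worth applying only to commands that are safe to run with root privileges.

```shell
#!/bin/sh
# Limit an executable to members of one group, as in the steps above:
# group owner = GROUP, no access for others, SUID and SGID set.
restrict_to_group() {
    file=$1
    group=$2
    chgrp "$group" "$file" || return 1
    chmod 4554 "$file" || return 1    # -r-sr-xr--  SUID, others read only
    chmod g+s "$file"                 # -r-sr-sr--  add SGID
}
```

For example: restrict_to_group /usr/bin/write test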


Monday, March 1, 2010

Solaris: Troubleshooting: NIS

Sometimes, when we try to create a user account soon after deleting our NIS domain, we may face the following error.

Output for reference:

bash-3.00# useradd -m -d /export/home/lingesh lingesh

64 blocks

bash-3.00# passwd lingesh

New Password:

Re-enter new Password:

Permission denied

This shows that the user account can be created, whereas a password cannot be assigned to the newly created user account.

In this case, we have to perform the following step.

bash-3.00# cp /etc/nsswitch.files /etc/nsswitch.conf

The above command replaces /etc/nsswitch.conf with the default template for local files.

Output for reference:

bash-3.00# passwd lingesh

New Password:

Re-enter new Password:

passwd: password successfully changed for lingesh
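Before (and after) copying the template, it helps to check whether the passwd entry still points at NIS. A minimal sketch; the function name passwd_sources is hypothetical. It prints the passwd line and returns 1 if nis is listed as a source, 2 if there is no passwd entry. Keeping a copy of the current file first (for example, cp -p /etc/nsswitch.conf /etc/nsswitch.conf.nis) makes it easy to restore.

```shell
#!/bin/sh
# Print the passwd line of an nsswitch.conf-style file (default:
# /etc/nsswitch.conf). Return 1 if "nis" is a source, 2 if no entry exists.
passwd_sources() {
    awk '
        $1 == "passwd:" {
            found = 1
            print
            for (i = 2; i <= NF; i++)
                if ($i == "nis") usesnis = 1
        }
        END {
            if (!found) exit 2
            exit (usesnis ? 1 : 0)
        }' "${1:-/etc/nsswitch.conf}"
}
```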


Tuesday, February 23, 2010

Solaris: ZFS Administration

ZFS has been designed to be robust, scalable and simple to administer.

ZFS pool storage features:

ZFS eliminates volume management altogether. Instead of forcing us to create virtual volumes, ZFS aggregates devices into a storage pool. The storage pool describes the physical characteristics of the storage (device layout, data redundancy, and so on) and acts as an arbitrary data store from which file systems can be created.

File systems grow automatically within the space allocated to the storage pool.

ZFS is a transactional file system, which means that the file system state is always consistent on disk. With a transactional file system, data is managed using copy-on-write semantics.

ZFS supports storage pools with varying levels of data redundancy, including mirroring and a variation on RAID-5. When a bad data block is detected, ZFS fetches the correct data from another replicated copy, and repairs the bad data, replacing it with the good copy.

The file system itself is 128-bit, allowing for 256 quadrillion zettabytes of storage. Directories can have up to 2 to the power of 48 (256 trillion) entries, and no limit exists on the number of file systems or the number of files that can be contained within a file system.

A snapshot is a read-only copy of a file system or volume. Snapshots can be created quickly and easily. Initially, snapshots consume no additional space within the pool.

Clone – A file system whose initial contents are identical to the contents of a snapshot.

ZFS component Naming requirements:

Each ZFS component must be named according to the following rules;

1. Empty components are not allowed.

2. Each component can only contain alphanumeric characters in addition to the following 4 special characters:

a. Underscore (_)

b. Hyphen (-)

c. Colon (: )

d. Period (.)

3. Pool names must begin with a letter, except that the beginning sequence c[0-9] is not allowed (this is because of the physical device naming convention). In addition, pool names that begin with mirror, raidz, or spare are not allowed, as these names are reserved.

4. Dataset names must begin with an alphanumeric character.
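The pool naming rules above translate directly into a shell case statement. A minimal sketch that checks only the rules listed here (ZFS itself may enforce further restrictions); the function name valid_pool_name is hypothetical.

```shell
#!/bin/sh
# Check a proposed pool name against the naming rules listed above.
# Returns 0 if the name passes; otherwise prints the reason and returns 1.
valid_pool_name() {
    name=$1
    case $name in
        '')                    echo "empty name"; return 1 ;;
        *[!A-Za-z0-9_:.-]*)    echo "invalid character"; return 1 ;;
        [!A-Za-z]*)            echo "must begin with a letter"; return 1 ;;
        c[0-9]*)               echo "c<digit> prefix is reserved for device names"; return 1 ;;
        mirror*|raidz*|spare*) echo "reserved prefix"; return 1 ;;
    esac
    return 0
}
```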

ZFS Hardware and Software requirements and recommendations:

1. A SPARC or x86 system that is running the Solaris 10 6/06 release or a later release.

2. The minimum disk size is 128 MB. The minimum amount of disk space required for a storage pool is approximately 64 MB.

3. The minimum amount of memory recommended to install a Solaris system is 512 MB. However, for good ZFS performance, at least 1 GB of memory is recommended.

4. When creating a mirrored disk configuration, multiple controllers are recommended.

ZFS commands:

zpool create

zpool add

zpool remove

zpool attach

zpool detach

zpool destroy

zpool list

zpool status

zpool replace

zfs create

zfs destroy

zfs snapshot

zfs rollback

zfs clone

zfs list

zfs set

zfs get

zfs mount

zfs unmount

zfs share

zfs unshare
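The snapshot, rollback and clone commands from this list are not shown in the outputs below, so here is a minimal sketch of how they fit together on the testpool/homedir file system. The snapshot name before_change and the clone name testpool/homedir_clone are hypothetical; the commands are only printed unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Print each command by default; set DRY_RUN=0 to actually execute it.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

FS=testpool/homedir            # file system used in the examples below
SNAP=$FS@before_change         # hypothetical snapshot name
CLONE=testpool/homedir_clone   # hypothetical clone name

snapshot_demo() {
    run zfs snapshot "$SNAP"         # read-only copy, initially uses no space
    run zfs list -t snapshot         # list existing snapshots
    run zfs rollback "$SNAP"         # discard changes made after the snapshot
    run zfs clone "$SNAP" "$CLONE"   # writable file system based on the snapshot
}
```

A snapshot that has clones cannot be destroyed until its clones are destroyed (or promoted), so the clone is usually removed first when cleaning up.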

Output: Creating a zpool:

bash-3.00# zpool create testpool c2d0s7

bash-3.00# zpool list

NAME SIZE USED AVAIL CAP HEALTH ALTROOT

testpool 2G 77.5K 2.00G 0% ONLINE -

bash-3.00# df -h

Filesystem size used avail capacity Mounted on

/dev/dsk/c1d0s0 20G 10G 9.3G 53% /

/devices 0K 0K 0K 0% /devices

ctfs 0K 0K 0K 0% /system/contract

proc 0K 0K 0K 0% /proc

mnttab 0K 0K 0K 0% /etc/mnttab

swap 3.3G 728K 3.3G 1% /etc/svc/volatile

objfs 0K 0K 0K 0% /system/object

/usr/lib/libc/libc_hwcap2.so.1

20G 10G 9.3G 53% /lib/libc.so.1

fd 0K 0K 0K 0% /dev/fd

swap 3.3G 48K 3.3G 1% /tmp

swap 3.3G 32K 3.3G 1% /var/run

testpool 2.0G 24K 2.0G 1% /testpool

Output: Creating a file system under a zpool:

bash-3.00# zfs create testpool/homedir

bash-3.00# df -h

Filesystem size used avail capacity Mounted on

/dev/dsk/c1d0s0 20G 10G 9.3G 53% /

/devices 0K 0K 0K 0% /devices

ctfs 0K 0K 0K 0% /system/contract

proc 0K 0K 0K 0% /proc

mnttab 0K 0K 0K 0% /etc/mnttab

swap 3.3G 728K 3.3G 1% /etc/svc/volatile

objfs 0K 0K 0K 0% /system/object

/usr/lib/libc/libc_hwcap2.so.1

20G 10G 9.3G 53% /lib/libc.so.1

fd 0K 0K 0K 0% /dev/fd

swap 3.3G 48K 3.3G 1% /tmp

swap 3.3G 32K 3.3G 1% /var/run

testpool 2.0G 25K 2.0G 1% /testpool

testpool/homedir 2.0G 24K 2.0G 1% /testpool/homedir

bash-3.00# mkfile 100m /testpool/homedir/newfile

bash-3.00# df -h

Filesystem size used avail capacity Mounted on

/dev/dsk/c1d0s0 20G 10G 9.1G 54% /

/devices 0K 0K 0K 0% /devices

ctfs 0K 0K 0K 0% /system/contract

proc 0K 0K 0K 0% /proc

mnttab 0K 0K 0K 0% /etc/mnttab

swap 3.1G 728K 3.1G 1% /etc/svc/volatile

objfs 0K 0K 0K 0% /system/object

/usr/lib/libc/libc_hwcap2.so.1

20G 10G 9.1G 54% /lib/libc.so.1

fd 0K 0K 0K 0% /dev/fd

swap 3.1G 48K 3.1G 1% /tmp

swap 3.1G 32K 3.1G 1% /var/run

testpool 2.0G 25K 1.9G 1% /testpool

testpool/homedir 2.0G 100M 1.9G 5% /testpool/homedir

Mirror:

bash-3.00# zpool create testmirrorpool mirror c2d0s3 c2d0s4

bash-3.00# zpool list

NAME SIZE USED AVAIL CAP HEALTH ALTROOT

testmirrorpool 4.97G 52.5K 4.97G 0% ONLINE -

testpool 2G 100M 1.90G 4% ONLINE -

bash-3.00# df -h

Filesystem size used avail capacity Mounted on

/dev/dsk/c1d0s0 20G 10G 9.1G 54% /

/devices 0K 0K 0K 0% /devices

ctfs 0K 0K 0K 0% /system/contract

proc 0K 0K 0K 0% /proc

mnttab 0K 0K 0K 0% /etc/mnttab

swap 3.1G 736K 3.1G 1% /etc/svc/volatile

objfs 0K 0K 0K 0% /system/object

/usr/lib/libc/libc_hwcap2.so.1

20G 10G 9.1G 54% /lib/libc.so.1

fd 0K 0K 0K 0% /dev/fd

swap 3.1G 48K 3.1G 1% /tmp

swap 3.1G 32K 3.1G 1% /var/run

testpool 2.0G 25K 1.9G 1% /testpool

testpool/homedir 2.0G 100M 1.9G 5% /testpool/homedir

testmirrorpool 4.9G 24K 4.9G 1% /testmirrorpool

bash-3.00# cat /etc/mnttab

#device device mount FS fsck mount mount

#to mount to fsck point type pass at boot options

#

testpool /testpool zfs rw,devices,setuid,exec,atime,dev=2d50002 1258087961

testpool/homedir /testpool/homedir zfs rw,devices,setuid,exec,atime,dev=2d50003 1258088096

testmirrorpool /testmirrorpool zfs rw,devices,setuid,exec,atime,dev=2d50004 1258089634

DESTROYING A POOL:

bash-3.00# zpool destroy testmirrorpool

bash-3.00# zpool list

NAME SIZE USED AVAIL CAP HEALTH ALTROOT

testpool 2G 100M 1.90G 4% ONLINE -
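With several pools, the HEALTH column is the one to watch. A minimal sketch that flags any pool that is not ONLINE, reading the tab-separated output of zpool list -H -o name,health; the function name unhealthy_pools is hypothetical. zpool status -x gives a built-in summary of the same kind ("all pools are healthy").

```shell
#!/bin/sh
# Read "name health" pairs on stdin, as printed by
#   zpool list -H -o name,health
# and report pools whose health is not ONLINE. Returns 1 if any are found.
unhealthy_pools() {
    awk '
        BEGIN { bad = 0 }
        NF >= 2 && $2 != "ONLINE" { print $1 " is " $2; bad = 1 }
        END { exit bad }'
}
```

For example: zpool list -H -o name,health | unhealthy_pools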

MANAGING ZFS PROPERTIES:

bash-3.00# zfs get all testpool/homedir

NAME PROPERTY VALUE SOURCE

testpool/homedir type filesystem -

testpool/homedir creation Sat Nov 14 11:34 2009 -

testpool/homedir used 24.5K -

testpool/homedir available 4.89G -

testpool/homedir referenced 24.5K -

testpool/homedir compressratio 1.00x -

testpool/homedir mounted yes -

testpool/homedir quota none default

testpool/homedir reservation none default

testpool/homedir recordsize 128K default

testpool/homedir mountpoint /testpool/homedir default

testpool/homedir sharenfs off default

testpool/homedir checksum on default

testpool/homedir compression off default

testpool/homedir atime on default

testpool/homedir devices on default

testpool/homedir exec on default

testpool/homedir setuid on default

testpool/homedir readonly off default

testpool/homedir zoned off default

testpool/homedir snapdir hidden default

testpool/homedir aclmode groupmask default

testpool/homedir aclinherit secure default

bash-3.00# zfs set quota=500m testpool/homedir

bash-3.00# zfs set compression=on testpool/homedir

bash-3.00# zfs set mounted=no testpool/homedir

cannot set mounted property: read only property

bash-3.00# zfs get all testpool/homedir

NAME PROPERTY VALUE SOURCE

testpool/homedir type filesystem -

testpool/homedir creation Sat Nov 14 11:34 2009 -

testpool/homedir used 24.5K -

testpool/homedir available 500M -

testpool/homedir referenced 24.5K -

testpool/homedir compressratio 1.00x -

testpool/homedir mounted yes -

testpool/homedir quota 500M local

testpool/homedir reservation none default

testpool/homedir recordsize 128K default

testpool/homedir mountpoint /testpool/homedir default

testpool/homedir sharenfs off default

testpool/homedir checksum on default

testpool/homedir compression on local

testpool/homedir atime on default

testpool/homedir devices on default

testpool/homedir exec on default

testpool/homedir setuid on default

testpool/homedir readonly off default

testpool/homedir zoned off default

testpool/homedir snapdir hidden default

testpool/homedir aclmode groupmask default

testpool/homedir aclinherit secure default

INHERITING ZFS PROPERTIES:

bash-3.00# zfs get -r compression testpool

NAME PROPERTY VALUE SOURCE

testpool compression off default

testpool/homedir compression on local

testpool/homedir/nesteddir compression on local

bash-3.00# zfs inherit compression testpool/homedir

bash-3.00# zfs get -r compression testpool

NAME PROPERTY VALUE SOURCE

testpool compression off default

testpool/homedir compression off default

testpool/homedir/nesteddir compression on local

bash-3.00# zfs inherit -r compression testpool/homedir

bash-3.00# zfs get -r compression testpool

NAME PROPERTY VALUE SOURCE

testpool compression off default

testpool/homedir compression off default

testpool/homedir/nesteddir compression off default
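The transcript shows the difference: zfs inherit resets only the named dataset, while zfs inherit -r also resets its descendants (testpool/homedir/nesteddir kept compression=on until -r was used). Before a recursive reset it can help to see which datasets actually hold a local value. A small sketch, assuming the zfs get -r output layout shown above; locally_set is a hypothetical name:

```bash
#!/bin/bash
# locally_set: read "zfs get -r PROPERTY ROOT" output on stdin and print the
# datasets whose value is set locally, i.e. the ones "zfs inherit -r" would reset.
# A locally set value has the single word "local" as its last field.
locally_set() {
    awk 'NR > 1 && NF >= 4 && $NF == "local" { print $1 }'
}

# Usage: zfs get -r compression testpool | locally_set
```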

QUERYING ZFS PROPERTIES:

bash-3.00# zfs get checksum testpool/homedir

NAME PROPERTY VALUE SOURCE

testpool/homedir checksum on default

bash-3.00# zfs get all testpool/homedir

NAME PROPERTY VALUE SOURCE

testpool/homedir type filesystem -

testpool/homedir creation Sat Nov 14 11:34 2009 -

testpool/homedir used 50K -

testpool/homedir available 500M -

testpool/homedir referenced 25.5K -

testpool/homedir compressratio 1.00x -

testpool/homedir mounted yes -

testpool/homedir quota 500M local

testpool/homedir reservation none default

testpool/homedir recordsize 128K default

testpool/homedir mountpoint /testpool/homedir default

testpool/homedir sharenfs off default

testpool/homedir checksum on default

testpool/homedir compression off default

testpool/homedir atime on default

testpool/homedir devices on default

testpool/homedir exec on default

testpool/homedir setuid on default

testpool/homedir readonly off default

testpool/homedir zoned off default

testpool/homedir snapdir hidden default

testpool/homedir aclmode groupmask default

testpool/homedir aclinherit secure default

bash-3.00# zfs get -s local all testpool/homedir

NAME PROPERTY VALUE SOURCE

testpool/homedir quota 500M local
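For scripts, parsing the column layout above is fragile. zfs get also has a scripted mode: -H omits the header and separates fields with tabs, and -o selects which columns to print. A minimal sketch of a helper that returns just one property value; zfs_prop is a hypothetical name:

```bash
#!/bin/bash
# zfs_prop PROPERTY DATASET: print only the VALUE column for one property.
# -H: no header, tab-separated fields; -o value: print the VALUE column only.
zfs_prop() {
    zfs get -H -o value "$1" "$2"
}

# Usage:
#   if [ "$(zfs_prop compression testpool/homedir)" = "on" ]; then
#       echo "compression is enabled"
#   fi
```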

RAID-Z POOL:

bash-3.00# zpool create testraid5pool raidz c2d0s3 c2d0s4 c2d0s5

bash-3.00# zpool list

NAME SIZE USED AVAIL CAP HEALTH ALTROOT

testpool 2G 100M 1.90G 4% ONLINE -

testraid5pool 14.9G 89K 14.9G 0% ONLINE -

bash-3.00# df -h

Filesystem size used avail capacity Mounted on

/dev/dsk/c1d0s0 20G 10G 9.1G 54% /

/devices 0K 0K 0K 0% /devices

ctfs 0K 0K 0K 0% /system/contract

proc 0K 0K 0K 0% /proc

mnttab 0K 0K 0K 0% /etc/mnttab

swap 3.1G 736K 3.1G 1% /etc/svc/volatile

objfs 0K 0K 0K 0% /system/object

/usr/lib/libc/libc_hwcap2.so.1

20G 10G 9.1G 54% /lib/libc.so.1

fd 0K 0K 0K 0% /dev/fd

swap 3.1G 48K 3.1G 1% /tmp

swap 3.1G 32K 3.1G 1% /var/run

testpool 2.0G 25K 1.9G 1% /testpool

testpool/homedir 2.0G 100M 1.9G 5% /testpool/homedir

testraid5pool 9.8G 32K 9.8G 1% /testraid5pool

DOUBLE PARITY RAID-Z POOL:

bash-3.00# zpool create doubleparityraid5pool raidz2 c2d0s3 c2d0s4 c2d0s5

bash-3.00# zpool list

NAME SIZE USED AVAIL CAP HEALTH ALTROOT

doubleparityraid5pool 14.9G 158K 14.9G 0% ONLINE -

testpool 2G 100M 1.90G 4% ONLINE -

bash-3.00# df -h

Filesystem size used avail capacity Mounted on

/dev/dsk/c1d0s0 20G 10G 9.1G 54% /

/devices 0K 0K 0K 0% /devices

ctfs 0K 0K 0K 0% /system/contract

proc 0K 0K 0K 0% /proc

mnttab 0K 0K 0K 0% /etc/mnttab

swap 3.1G 736K 3.1G 1% /etc/svc/volatile

objfs 0K 0K 0K 0% /system/object

/usr/lib/libc/libc_hwcap2.so.1

20G 10G 9.1G 54% /lib/libc.so.1

fd 0K 0K 0K 0% /dev/fd

swap 3.1G 48K 3.1G 1% /tmp

swap 3.1G 32K 3.1G 1% /var/run

testpool 2.0G 25K 1.9G 1% /testpool

testpool/homedir 2.0G 100M 1.9G 5% /testpool/homedir

doubleparityraid5pool 4.9G 24K 4.9G 1% /doubleparityraid5pool
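The two listings above report capacity differently: zpool list shows the raw size of all three slices including parity, while df shows the space the file system can use. For N equal-sized devices with P devices' worth of parity (P = 1 for raidz, P = 2 for raidz2), a rough estimate that ignores metadata and allocation overhead, checked against the figures in this transcript, is:

```latex
C_{\mathrm{usable}} \approx C_{\mathrm{raw}} \times \frac{N - P}{N}

\text{RAID-Z } (N = 3,\ P = 1):\quad 14.9\,\mathrm{G} \times \tfrac{2}{3} \approx 9.9\,\mathrm{G} \quad (\texttt{df}: 9.8\,\mathrm{G})

\text{RAID-Z2 } (N = 3,\ P = 2):\quad 14.9\,\mathrm{G} \times \tfrac{1}{3} \approx 5.0\,\mathrm{G} \quad (\texttt{df}: 4.9\,\mathrm{G})
```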

DRY RUN OF STORAGE POOL CREATION:

bash-3.00# zpool create -n testmirrorpool mirror c2d0s3 c2d0s4

would create 'testmirrorpool' with the following layout:

testmirrorpool

mirror

c2d0s3

c2d0s4

bash-3.00# zpool list

NAME SIZE USED AVAIL CAP HEALTH ALTROOT

testpool 2G 100M 1.90G 4% ONLINE -

bash-3.00# df

/ (/dev/dsk/c1d0s0 ):19485132 blocks 2318425 files

/devices (/devices ): 0 blocks 0 files

/system/contract (ctfs ): 0 blocks 2147483612 files

/proc (proc ): 0 blocks 16285 files

/etc/mnttab (mnttab ): 0 blocks 0 files

/etc/svc/volatile (swap ): 6598720 blocks 293280 files

/system/object (objfs ): 0 blocks 2147483444 files

/lib/libc.so.1 (/usr/lib/libc/libc_hwcap2.so.1):19485132 blocks 2318425 files

/dev/fd (fd ): 0 blocks 0 files

/tmp (swap ): 6598720 blocks 293280 files

/var/run (swap ): 6598720 blocks 293280 files

/testpool (testpool ): 3923694 blocks 3923694 files

/testpool/homedir (testpool/homedir ): 3923694 blocks 3923694 files

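Note: The -n option performs a dry run. zpool checks whether the pool could be created and, if so, prints the layout it would create (as above) without actually creating anything; the zpool list and df output that follow confirm that no testmirrorpool exists. If the pool could not be created, zpool create -n reports the same error that the real zpool create would have produced.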
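The dry run can be used as a guard before the real command. A minimal sketch, where create_pool_checked is a hypothetical wrapper and not part of zpool; it relies only on zpool create -n returning a non-zero exit status when the pool could not be created:

```bash
#!/bin/bash
# create_pool_checked POOL VDEV...: hypothetical wrapper that runs a dry run
# first and creates the pool only if the dry run succeeds.
create_pool_checked() {
    local pool=$1
    shift
    # Dry run: prints the layout it would create, or the error it would hit.
    if ! zpool create -n "$pool" "$@"; then
        echo "dry run failed for pool '$pool'; not creating it" >&2
        return 1
    fi
    # Dry run succeeded: create the pool for real with the same arguments.
    zpool create "$pool" "$@"
}

# Usage: create_pool_checked testmirrorpool mirror c2d0s3 c2d0s4
```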

LISTING POOLS AND ZFS FILE SYSTEMS:

bash-3.00# zpool list

NAME SIZE USED AVAIL CAP HEALTH ALTROOT

testmirrorpool 4.97G 52.5K 4.97G 0% ONLINE -

testpool 2G 100M 1.90G 4% ONLINE -

bash-3.00# zpool list -o name,size,health

NAME SIZE HEALTH

testmirrorpool 4.97G ONLINE

testpool 2G ONLINE
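With -o, zpool list prints only the requested columns, which makes the output easy to filter. A sketch that reads that output and reports any pool whose HEALTH is not ONLINE, assuming the three-column layout shown above; unhealthy_pools is a hypothetical name:

```bash
#!/bin/bash
# unhealthy_pools: read "zpool list -o name,size,health" output on stdin,
# print the name of every pool whose HEALTH column is not ONLINE, and
# return 1 if any were found (0 otherwise).
unhealthy_pools() {
    awk 'NR > 1 && NF >= 3 && $3 != "ONLINE" { print $1; bad = 1 }
         END { exit (bad ? 1 : 0) }'
}

# Usage: zpool list -o name,size,health | unhealthy_pools || echo "check zpool status"
```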

bash-3.00# zpool status -x

all pools are healthy

bash-3.00# zpool status -x testmirrorpool

pool 'testmirrorpool' is healthy
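zpool status -x prints only pools with problems, and the fixed message "all pools are healthy" when there are none, so a periodic check (for example from cron) can simply compare against that string. A minimal sketch; check_pools is a hypothetical name, and the message text is taken from the output above:

```bash
#!/bin/bash
# check_pools: return 0 if "zpool status -x" reports no problems; otherwise
# print its output (the pools needing attention) and return 1.
check_pools() {
    local out
    out=$(zpool status -x 2>&1)
    if [ "$out" = "all pools are healthy" ]; then
        return 0
    fi
    printf '%s\n' "$out"
    return 1
}

# Usage (e.g. from cron): check_pools || echo "ZFS pool problem detected"
```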

bash-3.00# zpool status -v

pool: testmirrorpool

state: ONLINE

scrub: none requested

config:

NAME STATE READ WRITE CKSUM

testmirrorpool ONLINE 0 0 0

mirror ONLINE 0 0 0

c2d0s3 ONLINE 0 0 0

c2d0s4 ONLINE 0 0 0

errors: No known data errors

pool: testpool

state: ONLINE

scrub: none requested

config:

NAME STATE READ WRITE CKSUM

testpool ONLINE 0 0 0

c2d0s7 ONLINE 0 0 0

errors: No known data errors

bash-3.00# zpool status -v testmirrorpool

pool: testmirrorpool

state: ONLINE

scrub: none requested

config:

NAME STATE READ WRITE CKSUM

testmirrorpool ONLINE 0 0 0

mirror ONLINE 0 0 0

c2d0s3 ONLINE 0 0 0

c2d0s4 ONLINE 0 0 0

errors: No known data errors

bash-3.00# zfs list

NAME USED AVAIL REFER MOUNTPOINT

testmirrorpool 75.5K 4.89G 24.5K /testmirrorpool